Back to the basics now, especially since there is so much emphasis on letting the tools do all the work for us that we forget how to do it ourselves when the tools aren’t available. This time we’re going to walk through the process of locating a deleted file on a hard drive. I’m using a deleted file here but, of course, the same process can be used to locate information about non-deleted files in a disk image as well. To save a little time, I’m going to use some utilities from The Sleuth Kit (TSK), though we could also do the same thing by hand. I should also mention that one of the reasons for writing this up is that I am working through a file systems chapter in my next book on Operating Systems Forensics, due to be published early next year by Syngress/Elsevier. It helps to understand the structure of the file system format so you know where on the disk to look for data.

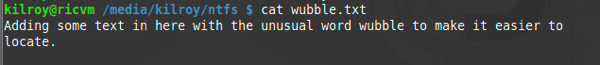

The first thing I need to do is create a file to work with on an NTFS partition. I’m going to be doing this from a Linux system, though the Sleuth Kit utilities also work on other operating systems, such as Windows. Since I want to acquire a disk image, I am going to use Linux so I get dd. I am going to create the file with a word that isn’t likely to show up anywhere else on the file system in order to minimize the number of hits I get when I go looking. You can see the contents of the file below.

I need to grab an image of the partition and I may as well capture a cryptographic hash at the same time since it’s just good practice. I’m going to use dd to capture the image. In my case, I’m using dd if=/dev/sdb1 of=ntfs.dd. I want to capture the whole partition, so I am not setting a block size or a count. I want dd to run until it runs out of data to copy. Then I’m going to grab a cryptographic hash of the resulting image. When I’m all done, I get 2d59270e187217c3c222fc78851a1ebe91e3f8ec for my SHA1 hash on a disk image that is 150M in size. For the purposes of this exercise, I left the image small.
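If you want to check the hash without relying on a particular command-line tool, here is a minimal sketch in Python. The function name sha1_of_image is my own invention for illustration, and the chunk size is an arbitrary choice; it simply reads the image in pieces so a large image never has to fit in memory.

```python
import hashlib


def sha1_of_image(path, chunk_size=1024 * 1024):
    """Return the SHA-1 hex digest of the file at path (hypothetical helper)."""
    digest = hashlib.sha1()
    with open(path, "rb") as image:
        # Read fixed-size chunks until read() returns an empty bytes object.
        for chunk in iter(lambda: image.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

Calling sha1_of_image("ntfs.dd") on the image captured above should give the same value a SHA1 command-line tool reports for that file.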

After deleting the file from the disk, I want to figure out where the data is. There are a couple of ways of accomplishing this using the Sleuth Kit tools. The first is to look it up by the file name. In reality, when you delete a file, it’s not gone from your drive or partition. As a result, there are still references to the file name sitting out there on my partition. One TSK tool I can use to find the file by name is ifind. ifind can search the file system for a reference to a filename, or it can look up the metadata entry for a file based on a data unit you provide. In this example, I’m going to use ifind to look for the filename and then I’m going to use another TSK utility, icat, to pull out the contents of the file at the metadata address that ifind has provided for me. You can see what that looks like below.

That provides me with the contents of the file and you can compare the results with what we saw when I used cat to display the file. ifind looked in the image file ntfs.dd for a file named wubble.txt and it returned a metadata address of 73 (on NTFS, that is the Master File Table entry number, not a data block), which I then passed to icat to get the contents of the file that entry 73 describes. This assumes we know the name of the file or that the name of the file is still available to be searched on. What I may have instead is just a chunk of text that comes out of the file. I can use another utility to go looking for the file based on just a word search. Since the image is a binary file, I need to do something special with grep in order to figure out the offset where I can find the text I’m looking for. I am going to tell grep to treat the binary file as text (-a), print the byte offset of each hit (-b), and print only the matching text rather than the whole "line" (-o), so I’ll be using grep -oba to search my image file for the word wubble. You can see the results below.

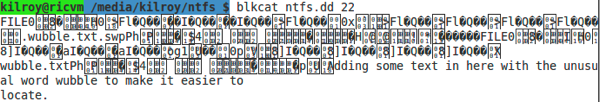
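For anyone without grep handy, the same kind of search can be sketched in Python. This is only an illustration under my own assumptions: find_offsets is a made-up name, and unlike grep -o it reports overlapping matches too, which makes no difference for a word like wubble. It keeps a short tail of each chunk so a hit that straddles two reads is still found.

```python
def find_offsets(path, needle, chunk_size=1024 * 1024):
    """Yield the byte offset of every occurrence of needle in the file."""
    if not needle:
        raise ValueError("needle must not be empty")
    overlap = len(needle) - 1
    with open(path, "rb") as image:
        base = 0        # file offset of buffer[0]
        buffer = b""
        while True:
            chunk = image.read(chunk_size)
            if not chunk:
                break
            buffer += chunk
            start = 0
            while True:
                hit = buffer.find(needle, start)
                if hit == -1:
                    break
                yield base + hit
                start = hit + 1
            # Keep the last len(needle) - 1 bytes: too short to hold a full
            # match (so no duplicates), long enough to complete one that
            # spans the boundary with the next chunk.
            keep = buffer[-overlap:] if overlap else b""
            base += len(buffer) - len(keep)
            buffer = keep
```

Something like list(find_offsets("ntfs.dd", b"wubble")) would return the byte offsets as a list of integers. Note that this searches for the ASCII bytes only, just as the grep command above does.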

It looks like we have several hits in the image. The number on the left-hand side is the byte offset. Since I have that in bytes, I need to figure out what cluster that is going to be in so I can use the TSK tools, which are block- or cluster-based. As a result, I need to do a little math but I need to know what my cluster size is first. Fortunately, I can use fsstat on my image and get my cluster size. Of course, I could also use a hex editor and do it the really old-fashioned way but this should be adequate for our purposes. If I divide 91551 by my cluster size of 4096, I end up with 22 and some change. That tells me that the data is going to be in cluster 22, so I can use the TSK tool blkcat to get the contents of block/cluster 22. You can see the results of that below.
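The arithmetic, along with the "old-fashioned" way of getting the cluster size, can be sketched in a few lines of Python. The function names are hypothetical. The field positions are the commonly documented NTFS boot sector layout (bytes per sector at offset 0x0B, sectors per cluster at 0x0D), and I am assuming the sectors-per-cluster byte is a plain count, which holds for typical cluster sizes like the 4096 used here.

```python
import struct


def cluster_size_from_boot_sector(boot_sector):
    """Compute bytes per cluster from the first sector of an NTFS volume."""
    # Offset 0x0B: bytes per sector (2 bytes, little-endian).
    # Offset 0x0D: sectors per cluster (1 byte, assumed to be a plain count).
    bytes_per_sector, sectors_per_cluster = struct.unpack_from(
        "<HB", boot_sector, 0x0B
    )
    return bytes_per_sector * sectors_per_cluster


def offset_to_cluster(byte_offset, cluster_size):
    """Return (cluster number, byte offset inside that cluster)."""
    return divmod(byte_offset, cluster_size)


# The hit from the grep output: cluster 22, 1439 bytes into the cluster.
print(offset_to_cluster(91551, 4096))  # (22, 1439)
```

To feed the first function, you would read the first 512 bytes of the image and pass them in.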

You can see a lot of funny looking characters. That’s because what we have here is an entry in the Master File Table (MFT), so there is a lot of binary data and when you try to convert a byte that wasn’t meant to be a character into a character, you end up with values that don’t translate into something that looks right. You can, though, see the text of the file and that’s pretty common for an NTFS entry. Since it’s a very small file, the contents of the file were simply stored as an attribute inside the MFT entry rather than taking up a data block somewhere else on the file system. You can also see the filename in the middle of all of that content. You can also see the value FILE0. The signature itself is FILE; the 0 is the byte 0x30, the offset to the update sequence array, which happens to display as the character 0. That offset suggests the drive was formatted with Windows XP or something newer. Since I used a Linux system to format it, the formatting utility just used conventions from a more recent version of Windows and NTFS.
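To make sense of the binary part rather than just squinting at it, here is a rough sketch that picks apart the first few fields of an MFT entry. Treat it as an illustration, not a full parser: parse_mft_header is a name I made up, the field offsets are the commonly documented NTFS entry header layout, and it ignores fixups and attributes entirely. It also shows why FILE0 appears (the byte 0x30 right after the signature) and reads the in-use flag, which should be cleared for an entry belonging to a deleted file.

```python
import struct


def parse_mft_header(entry):
    """Decode a few header fields from the raw bytes of one MFT entry."""
    signature = bytes(entry[0:4])
    # Bytes 4-5: offset to the update sequence (fixup) array.
    # Bytes 6-7: number of entries in that array.
    fixup_offset, fixup_count = struct.unpack_from("<HH", entry, 4)
    # Bytes 16-23: sequence number, link count, offset to the first
    # attribute, and flags (0x01 = in use, 0x02 = directory).
    sequence, links, first_attr, flags = struct.unpack_from("<HHHH", entry, 16)
    return {
        "signature": signature,
        "fixup_offset": fixup_offset,
        "fixup_count": fixup_count,
        "sequence": sequence,
        "link_count": links,
        "first_attribute_offset": first_attr,
        "in_use": bool(flags & 0x01),
        "is_directory": bool(flags & 0x02),
    }
```

Assuming the usual 1024-byte entry size, a 4096-byte cluster holds four entries, so you would slice the cluster at the right 1024-byte boundary before passing the bytes in.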

We used a lot of TSK utilities to do this but we could just as easily stick with standard UNIX utilities to perform the work. From the grep output and the arithmetic above, we know the data we are looking for is 22 clusters into the file system. We can easily use dd to extract that cluster and then use xxd to view it in a hexadecimal dump. Using dd, we would set the block size to the block/cluster size of 4096 and then skip 22 blocks or clusters, grabbing only one. So, we could use dd if=ntfs.dd of=caught.dd bs=4096 count=1 skip=22 to grab a single block from the image file we have. Once we have the 4096-byte cluster, we can just use xxd caught.dd to view the results in a hexadecimal dump and see that we have the MFT entry for the file wubble.txt.
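And if even dd and xxd are missing, the same extraction is only a seek and a read. The sketch below is my own stand-in, with hypothetical function names, for what the dd and xxd commands above do; the dump layout is only loosely modeled on xxd and is not meant to match its output byte for byte.

```python
def read_cluster(path, cluster, cluster_size=4096):
    """Return the raw bytes of one cluster (like dd bs=... skip=... count=1)."""
    with open(path, "rb") as image:
        image.seek(cluster * cluster_size)
        return image.read(cluster_size)


def hexdump(data, width=16):
    """Format bytes as offset, hex values, and printable ASCII per line."""
    lines = []
    pad = width * 3 - 1  # width of a full row of "xx " pairs
    for offset in range(0, len(data), width):
        row = data[offset:offset + width]
        hex_part = " ".join(f"{b:02x}" for b in row)
        # Bytes outside the printable ASCII range are shown as dots.
        text = "".join(chr(b) if 32 <= b < 127 else "." for b in row)
        lines.append(f"{offset:08x}: {hex_part.ljust(pad)}  {text}")
    return "\n".join(lines)
```

Printing hexdump(read_cluster("ntfs.dd", 22)) would show the same cluster that the dd command writes to caught.dd.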

As a new guy in the field of digital forensics, I haven't yet determined how to explain the garbled data that is displayed when it wasn't meant to be displayed. I don't know how relevant my discovery of this is, but you summed it up perfectly...albeit not your main point!

"You can see a lot of funny looking characters. That’s because what we have here is an entry in the Master File Table so there is a lot of binary data and when you try to convert a byte that wasn’t meant to be a character into a character, you end up with values that don’t translate into something that looks right."

Thank you for that!