Data carving, for those uninitiated in the arcane ways of forensic investigators or just technology geeks who like screwing around with things, is the process of extracting files out of a large pile of bits. You may want to do this to pull files out of hidden areas on a disk or to recover deleted files. You may also just want to see if you can do it, for the fun of it. There are a lot of different ways of carving data out of a disk, and I’m going to walk through one way using only tools that you can find on your average Linux distribution. So, data carving the old-fashioned way.

The Setup

First, I’m using virtual machines, which makes life a little easier when it comes to shuffling disks around and keeping them small for the purposes of imaging them. I’m going to be using a disk image, though you could just as easily use a raw disk and the process would be the same. While I created the disk inside a Windows virtual machine, I imaged it from a Linux VM using dd. The first thing we want to do is find some files we want to carve out. Since I created the disk, I know there are JPEG images on it. Before you go digging for gold or data, you have to know what it is you are looking for. While I’m looking for a JPEG image, I have to know what that JPEG image looks like at the byte level before I can go searching bits and bytes. It’s not like I can tell the system to go looking for a picture of my hot girlfriend in a bikini. You have to know some sort of digital pattern.

Fortunately, when it comes to JPEGs, I happen to know that there are some key markers I should be looking for. While there are specific byte patterns that start and end the file, it’s a bit easier to start off looking for a string. Many JPEGs, including the ones on this disk, carry the string JFIF in their headers, so I have a starting point: I can search the disk for the ASCII pattern JFIF. Once I find that pattern, I can isolate the file and extract it. Again, nothing up my sleeve other than the usual command line suspects that you’d find in any Linux distribution.

The Carving

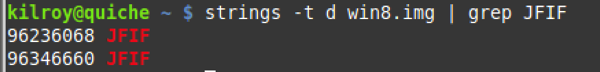

The first thing is to go looking for the string JFIF, since I know it will be in the header. I’m going to use the Linux/UNIX strings command, which prints the runs of printable characters it finds in a file, and filter its output for JFIF. Since I already know the string is going to be there, finding it isn’t enough; I also need to know where it is. As a result, I have to tell strings to print the byte location of each string within the file. To do that, I use the option -t with a parameter of d: -t says print the offset and d says print it in decimal.
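As a rough illustration of what that search amounts to, here is a minimal Python sketch that reports the decimal byte offset of every occurrence of an ASCII string in a file, much like picking the JFIF lines out of the output of strings -t d. The function name find_string_offsets and the tiny synthetic image are assumptions made for the demonstration; they are not part of the original walkthrough.

```python
import os
import tempfile


def find_string_offsets(path, needle, chunk_size=1 << 20):
    """Return the decimal byte offsets at which `needle` occurs in the file.

    The file is read in chunks so a large disk image never has to fit in
    memory; a short tail is carried over so that matches straddling a chunk
    boundary are still found.
    """
    offsets = []
    overlap = len(needle) - 1
    tail = b""
    base = 0  # file offset of the first byte of `tail + chunk`
    with open(path, "rb") as f:
        while True:
            chunk = f.read(chunk_size)
            if not chunk:
                break
            window = tail + chunk
            start = 0
            while True:
                hit = window.find(needle, start)
                if hit < 0:
                    break
                offsets.append(base + hit)
                start = hit + 1
            # Keep the last few bytes for the next round.
            tail = window[-overlap:] if overlap else b""
            base += len(window) - len(tail)
    return offsets


# Demo on a tiny synthetic "image": 1000 zero bytes, then a fake header.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"\x00" * 1000 + b"\xff\xd8\xff\xe0\x00\x10JFIF\x00" + b"\x00" * 100)
    demo_path = tmp.name

jfif_offsets = find_string_offsets(demo_path, b"JFIF", chunk_size=64)
os.remove(demo_path)
print(jfif_offsets)  # [1006]
```

The offset printed is that of the J in JFIF, not of the start of the file that contains it, which is why the next step is needed.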

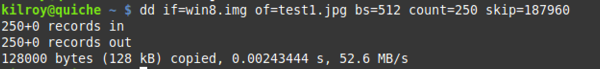

Now I have some byte locations, but I need to do a little math to figure out where to look. I could start grabbing at that byte, but I need some bytes before it as well, since the string isn’t the beginning of the file. As a result, I’m going to figure out what sector that byte is in. To do that, I divide by 512, since a sector on this disk is 512 bytes. When I divide 96236068 by 512, I get 187961 with a remainder of 36, so the string sits 36 bytes into sector 187961, counting from zero. The file system is logically organized into clusters that are larger than a single sector, but I don’t need to worry about what cluster I’m in at this point. All I need to know is the sector. I can now use dd again to extract a chunk of the disk image that I think will correspond with the location of this file. I don’t know how big the file is, so I’m just going to grab a decent-sized chunk of the image and whittle it down once I find the end.
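The sector arithmetic is easy to check in a couple of lines. This is only a sketch of the division described above, written in Python; the variable names are illustrative.

```python
SECTOR_SIZE = 512          # bytes per sector, as assumed in the text
string_offset = 96236068   # decimal offset reported for the JFIF string

sector, byte_in_sector = divmod(string_offset, SECTOR_SIZE)
print(sector, byte_in_sector)   # 187961 36
print(sector * SECTOR_SIZE)     # 96236032, the first byte of that sector
```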

You’ll notice that I skipped 187960 blocks (sectors) before I started capturing my output. dd counts blocks from zero, so skipping 187961 blocks would start the capture at the sector that contains the string. The file has to begin somewhere before the string, though, so I reduce my number by 1 and start at sector 187960 instead, then check whether I landed on the beginning of the file. The very first bytes of a JPEG are FF D8, which mark the start of the image. If I use xxd to look at the file I got from the dd capture, I can see that my first two bytes are in fact FF D8.

0000000: ffd8 ffe2 021c 4943 435f 5052 4f46 494c ......ICC_PROFIL

0000010: 4500 0101 0000 020c 6c63 6d73 0210 0000 E.......lcms....
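For readers who want to see the skip-and-check step spelled out, here is a minimal Python sketch of the same idea: skip a number of whole sectors, read a fixed number of sectors, and look at the first two bytes. The helper read_sectors and the synthetic image are assumptions for illustration; the walkthrough itself uses dd and xxd.

```python
import os
import tempfile


def read_sectors(path, skip, count, sector_size=512):
    """Read `count` sectors after skipping `skip` sectors, like dd's skip= and count=."""
    with open(path, "rb") as f:
        f.seek(skip * sector_size)
        return f.read(count * sector_size)


# Synthetic image for demonstration only: 3 empty sectors, then data that
# starts with the JPEG start-of-image marker FF D8.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"\x00" * (3 * 512) + b"\xff\xd8\xff\xe2" + b"\x00" * 1020)
    demo_image = tmp.name

chunk = read_sectors(demo_image, skip=3, count=2)
os.remove(demo_image)

print(len(chunk))                     # 1024
print(chunk[:2].hex())                # ffd8
print(chunk.startswith(b"\xff\xd8"))  # True
```

If the check fails, the file most likely starts in an earlier sector, and the skip value can be lowered and the check repeated.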

What I need to do now is locate the end of the file so I can figure out where to truncate it. I’m looking for the byte pattern FF D9, the byte pair that marks the end of a JPEG file. I’m going to use a hex editor to find that byte pair and, with it, the offset where I need to truncate. In the image below, you can see the cursor at the beginning of the byte pattern. By counting over, I see the image ends at hexadecimal offset 1AD09. Now I know where to truncate the image.

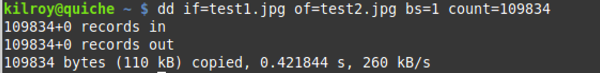
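The hunt for the end marker can also be sketched in code. The Python below lists the offset of the D9 byte of every FF D9 pair in a chunk of data; the function name find_eoi_offsets and the fake data are assumptions for illustration. One caveat worth stating: a JPEG with an embedded thumbnail can contain an earlier FF D9, so the first hit is a candidate to verify rather than a guaranteed end of file.

```python
def find_eoi_offsets(data):
    """Return the offset of the last byte (D9) of every FF D9 pair in `data`."""
    ends = []
    pos = data.find(b"\xff\xd9")
    while pos >= 0:
        ends.append(pos + 1)  # pos is the FF byte, pos + 1 is the D9 byte
        pos = data.find(b"\xff\xd9", pos + 1)
    return ends


# Synthetic chunk for demonstration only: a fake JPEG followed by slack bytes.
fake_jpeg = b"\xff\xd8" + b"\x11" * 20 + b"\xff\xd9"
chunk = fake_jpeg + b"\x00" * 40

ends = find_eoi_offsets(chunk)
print([hex(e) for e in ends])   # ['0x17']
```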

While I could truncate it in the editor, I can also use dd again to extract just the bytes I want and write them out to a new file. First, though, I need to convert 1AD09 from hexadecimal to decimal. I can use the simple programmer’s calculator that’s included with my operating system and let it do the conversion for me. I end up with 109833. Offsets start at zero and I want the byte at that position as well, so I’m going to grab 109834 bytes from the beginning of the JPEG and write them out to a new file.
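The conversion and the off-by-one are easy to get wrong, so here is a short Python sketch of both. The carve helper and the synthetic chunk are assumptions for illustration; only the offset 1AD09 comes from the walkthrough.

```python
end_offset = int("1AD09", 16)   # offset of the final D9 byte, from the hex editor
length = end_offset + 1         # offsets start at 0, so the byte count is one more
print(end_offset, length)       # 109833 109834


def carve(chunk, length):
    """Return the first `length` bytes of `chunk`, i.e. the carved file."""
    return chunk[:length]


# Synthetic stand-in for the extracted chunk, for demonstration only.
chunk = b"\xff\xd8" + b"\x11" * (length - 4) + b"\xff\xd9" + b"\x00" * 500
carved = carve(chunk, length)
print(len(carved), carved[-2:].hex())   # 109834 ffd9
```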

When I look at the hex output from the file, again using xxd, I can see that the last two bytes are in fact FF D9.

001acf0: 3305 30cc 8cb1 1b94 cb7f 8e25 ccba f794 3.0........%....

001ad00: f32e 8d18 25c0 6b13 ffd9 ....%.k...

I can now open the file up in an image editor or viewer and see the result. Of course, what we’ve done will work for any JPEG that carries the JFIF string and is stored contiguously on the disk. In order to carve out other file types, you would need to know the specific characteristics of that file type to be able to look for patterns in the disk.
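To make "specific characteristics" a little more concrete, here is a small Python sketch of a signature table. The JPEG entry matches the walkthrough; the PNG and PDF entries are included only as examples of the kind of start and end patterns you would look up for another format, and the names SIGNATURES and identify are assumptions. Real files can be messier than this, for example a PDF may contain more than one %%EOF.

```python
# (start bytes, end bytes) for a few common formats.
SIGNATURES = {
    "jpeg": (b"\xff\xd8\xff", b"\xff\xd9"),
    "png": (b"\x89PNG\r\n\x1a\n", b"IEND\xaeB`\x82"),
    "pdf": (b"%PDF-", b"%%EOF"),
}


def identify(data):
    """Return the name of the first signature whose start bytes match `data`."""
    for name, (header, _footer) in SIGNATURES.items():
        if data.startswith(header):
            return name
    return None


print(identify(b"\xff\xd8\xff\xe2\x02\x1cICC_PROFILE"))  # jpeg
print(identify(b"\x89PNG\r\n\x1a\n\x00\x00\x00\rIHDR"))   # png
print(identify(b"plain text"))                            # None
```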

Conclusion

You’ll have noticed that I searched for a string rather than the byte pattern. The reason is that I can’t easily search for the byte pattern with the tools I used without doing something in the middle, like converting the disk to a hexadecimal representation and then looking for the pattern there. I could also load the disk into a hex editor to find the pattern. With a large disk, either approach can be time-consuming and memory-consuming. strings is convenient because I can look for a string and have strings print the offset in the file where the string was located. The offset is really the most important part, since it indicates where on the disk I need to be looking. It would have been more direct to look for FF D8, but those are non-printable bytes that I couldn’t simply type in as a search term.

I could also, if I were in a programming frame of mind, write a program that would go looking for a hex pattern for me and I may have to do that if I can’t find a string pattern to look for first. Fortunately, there are tools that will go carving files out of a disk for you. In fact, there are a lot of them. Doing it manually, though, can give you an appreciation for what’s involved when those tools have to go grubbing around through a lot of bits and bytes looking for short byte patterns.
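A program of that kind does not have to be long. The Python sketch below memory-maps an image and looks for a byte pattern directly, optionally keeping only hits that fall at the start of a sector, on the assumption that files begin on a sector boundary. The function name find_pattern, the aligned_only flag, and the synthetic image are all assumptions for illustration, and this is nowhere near what a real carving tool does.

```python
import mmap
import os
import tempfile


def find_pattern(path, pattern, sector_size=512, aligned_only=True):
    """Yield (offset, sector) for each occurrence of `pattern` in the file.

    With aligned_only=True, only hits at the start of a sector are reported.
    """
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
            pos = mm.find(pattern)
            while pos >= 0:
                if not aligned_only or pos % sector_size == 0:
                    yield pos, pos // sector_size
                pos = mm.find(pattern, pos + 1)


# Synthetic four-sector image for demonstration only.
sector = 512
image = bytearray(4 * sector)
image[sector + 100:sector + 103] = b"\xff\xd8\xff"   # unaligned decoy
image[2 * sector:2 * sector + 3] = b"\xff\xd8\xff"   # header at a sector start
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(image)
    demo_image = tmp.name

hits = list(find_pattern(demo_image, b"\xff\xd8\xff"))
all_hits = list(find_pattern(demo_image, b"\xff\xd8\xff", aligned_only=False))
os.remove(demo_image)
print(hits)       # [(1024, 2)]
print(all_hits)   # [(612, 1), (1024, 2)]
```

Filtering on sector alignment is a design choice that cuts down on false hits from short patterns like FF D8, at the cost of missing anything embedded inside another file.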

Very cool. I've thought about this problem from time to time in the context that the data might not be contiguous on disk. What is the mechanism by which the sectors are logically linked together when discontinuous? Wouldn't that case require a different approach?

Yes, it would require a different strategy. Modern filesystems generally work to keep clusters contiguous as much as possible, and since this was a new filesystem, any file written would get contiguous clusters. In the case of larger files where the clusters may not be contiguous, you'd have to go back to the filesystem's own records: find the file's directory entry, which leads to the cluster that starts the file, and follow the filesystem's bookkeeping from there to all of the clusters that belong to the file. I did this without any involvement of the filesystem itself. In some cases, as in a situation where the file has been deleted, this may be the best you can do and you get what you can from it.

To answer your question about the mechanism by which the clusters (a chunk of contiguous sectors) are linked together logically to create a file, it's handled at the filesystem level. The specifics depend on the filesystem. In the case of NTFS, for example, you would use the file's record in the Master File Table to find all of the clusters. In the case of a Linux filesystem such as ext2 or ext3, each file has an inode that points to its data blocks, and those pointers may actually end up being a tree with direct links and indirect links. Entertaining, huh?

Totally. I used to know this but those sectors get soft after 20 years of neglect. My real question is: is it the directory entries that link the sectors, or is there a pointer at the end of each sector linking to the next. I could Google that, but you are far more entertaining.

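The directory entry gets you to the list of clusters each file uses, but how that is implemented depends on the filesystem. In FAT, for example, the directory entry records the file's first cluster and the File Allocation Table holds, for each cluster, the number of the next cluster in the chain, while NTFS keeps the list of cluster runs in the file's Master File Table record.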
